- #Regress dictionary mod

- #Regress dictionary update

- #Regress dictionary Patch

- #Regress dictionary code

In both methods the number of atoms $K$ in $D$ was the same, equal to 441, and the total number of iterations of the algorithm, denoted by $i_{it,total}$, was large, on the order of thousands, which is a concern if a time constraint is imposed.

#Regress dictionary code

Elsewhere, K-SVD was used to learn a dictionary from the input image, sparse code the image with it, and achieve compression by entropy encoding the sparse coefficients.

#Regress dictionary Patch

Because K-SVD is one of the most widely used and most recent of these algorithms, this work focuses on it. Only a few previous works have used K-SVD; they cover facial image compression and image compression using sparse dictionaries. In one of them, K-SVD was used to learn a set of dictionaries from facial images, each from a particular patch in every image, with all patches having different geometry.

#Regress dictionary update

RLS-DLA, being a modification of MOD, uses the same framework in a recursive manner, each time with a different subset of the training signals, and provides a way to adjust the amount of change made to the initial $D$. Last in the list, K-SVD relies on the Singular Value Decomposition of an error matrix to obtain a simultaneous update of both $d_i$ and $x_i$ in every iteration, unlike the previous methods, and exhibits quick convergence.
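The SVD-based update can be illustrated with a minimal NumPy sketch of a single atom update. It follows the commonly described K-SVD sub-step (restrict the error matrix to the signals that use the atom, then take its best rank-1 approximation); the function name `ksvd_update_atom` and the handling of unused atoms are assumptions made for illustration, not details taken from the text.

```python
import numpy as np

def ksvd_update_atom(Y, D, X, k):
    """Update atom k of D and row k of X in place (one K-SVD sub-step).

    Y: (n, N) signals, D: (n, K) dictionary, X: (K, N) sparse codes (float).
    """
    omega = np.flatnonzero(X[k, :])  # signals that currently use atom k
    if omega.size == 0:
        return D, X  # assumption: an unused atom is left unchanged
    # Error matrix without atom k's contribution, restricted to omega.
    E = Y[:, omega] - D @ X[:, omega] + np.outer(D[:, k], X[k, omega])
    U, S, Vt = np.linalg.svd(E, full_matrices=False)
    D[:, k] = U[:, 0]            # new unit-norm atom
    X[k, omega] = S[0] * Vt[0]   # matching coefficients; support is preserved
    return D, X
```

Because the rank-1 term is the best approximation of the restricted error matrix, the overall representation error cannot increase after the update, and the sparsity pattern of $X$ is unchanged.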

#Regress dictionary mod

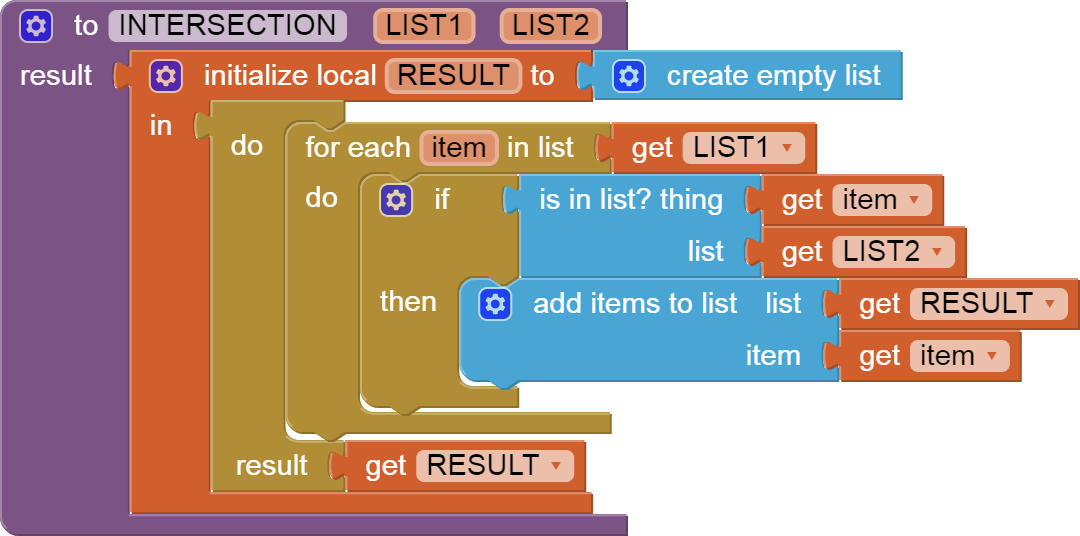

To obtain a sparser $X$, one uses overcomplete dictionaries with $K \gg n$; the increased sparsity is attributed to the additional $K - n$ degrees of freedom introduced in $D$. To learn or train $D$, it is common practice to start with an overcomplete transform dictionary, say DCT, as the initial dictionary and then train it on a training set of signals.

Several dictionary learning algorithms have been introduced so far, all sharing a similar framework consisting of two major steps. In the first step, the algorithm performs sparse coding of the training data $Y$ using the initial dictionary $D_{initial}$ or the updated one from the previous iteration of the algorithm. The second step updates each column $d_i$ of the dictionary by some method to better fit the training data.

The K-Means algorithm (or Vector Quantization), the Method of Optimal Directions (MOD), the Recursive Least Squares Dictionary Learning Algorithm (RLS-DLA) and K-Singular Value Decomposition (K-SVD) are some of the well-known algorithms for dictionary learning. The K-Means algorithm, the oldest and now seldom used, performs sparse coding (step 1) by dividing $Y$ into $K$ sets such that all $y_i$ in the same set have the same $d_i$ as their closest atom in terms of 2-norm distance. Then, in the second step, it updates each $d_i$ by replacing it with the vector obtained by normalizing the vector sum of all $y_i$ in that set. The MOD algorithm, a successor of K-Means, provides a dictionary atom update equation that is more efficient in terms of ease of implementation, and it can invoke separate algorithms to perform the sparse coding.
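The two K-Means steps described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: the function name `kmeans_dictionary_step` is hypothetical, and leaving an atom unchanged when no signal is assigned to it is a choice made here, not something the text specifies.

```python
import numpy as np

def kmeans_dictionary_step(Y, D):
    """One K-Means-style iteration: assign signals to atoms, then update atoms.

    Y: (n, N) training signals as columns. D: (n, K) atoms as columns.
    """
    # Step 1 (sparse coding): squared 2-norm distance from every atom to every signal.
    dists = ((D[:, :, None] - Y[:, None, :]) ** 2).sum(axis=0)  # shape (K, N)
    labels = dists.argmin(axis=0)  # index of the closest atom for each signal

    # Step 2 (dictionary update): normalized vector sum of the signals in each set.
    D_new = D.copy()
    for k in range(D.shape[1]):
        members = Y[:, labels == k]
        if members.shape[1] == 0:
            continue  # assumption: an atom with an empty set is left unchanged
        s = members.sum(axis=1)
        norm = np.linalg.norm(s)
        if norm > 0:
            D_new[:, k] = s / norm
    return D_new, labels
```

In practice the function would be called repeatedly, feeding `D_new` back in as `D`, until the assignments in `labels` stop changing.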

The very notion of sparse representation suggests that every signal we operate on, whether audio or image, can be represented as a linear combination of basis signals drawn from a dictionary. This representation, subject to some perturbation error as tolerance, is called the sparse code and finds application in a wide range of problems, among them image compression, image denoising, image inpainting and image deblurring. The selection of the dictionary containing the atoms, or basis signals, needs some attention. The two possible choices are a fixed transform-based dictionary or a dictionary that is learned from, or adapted to, the image content. Since the latter has significant advantages, most works are based on such learned or trained dictionaries.

Let $Y \in \mathbb{R}^{n \times N}$ be the input signal matrix composed of $N$ signals $y_i$ of dimensionality $n$. Let $D \in \mathbb{R}^{n \times K}$ be the dictionary composed of atoms $d_i$, represented as $D = [d_1, d_2, \dots, d_K]$. The sparse representation problem can then be viewed mathematically as solving Eq. (1):

$$\min_{X} \sum_i \|x_i\|_0 \quad \text{subject to} \quad \|y_i - D x_i\|_2^2 \le \epsilon \tag{1}$$

where $X \in \mathbb{R}^{K \times N}$ is the sparse representation matrix whose columns $x_i$ are the sparse vectors corresponding to $y_i$. $\epsilon$ is the perturbation error given as a constraint in sparse coding. It can be chosen as the target average mean square representation error in $y_i$ as given by Eq.
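Eq. (1) is usually solved only approximately, with a greedy pursuit. The sketch below uses Orthogonal Matching Pursuit for a single signal as one such approximation; the text does not name a particular solver, so the choice of OMP, the function name `omp_sparse_code`, and the assumption that the atoms have unit norm are all illustrative.

```python
import numpy as np

def omp_sparse_code(y, D, eps):
    """Greedy approximation of Eq. (1) for one signal y; atoms of D assumed unit-norm."""
    n, K = D.shape
    x = np.zeros(K)
    support = []
    residual = y.astype(float).copy()
    # Add atoms until the squared residual norm meets the tolerance eps.
    while residual @ residual > eps and len(support) < min(n, K):
        k = int(np.argmax(np.abs(D.T @ residual)))  # atom most correlated with residual
        if k in support:
            break  # no new atom improves the fit; stop to avoid looping
        support.append(k)
        # Least-squares fit of y on the atoms selected so far.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    if support:
        x[support] = coef
    return x
```

Applying the function to each column $y_i$ of $Y$ yields the columns $x_i$ of $X$; a smaller `eps` generally produces more nonzero coefficients per signal.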
